Xuanji (玄机)

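This industry competition included incident response, so I am starting this post to record what I learn about it.

It is mostly based on challenges from the Xuanji platform. I have to say, Xuanji's challenges are really expensive!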

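Incident Response: Bus System Emergency Investigation

Challenge description:

This environment focuses on traffic analysis, script writing, webshell detection, and obfuscated code detection (the approach is general-purpose). For learning and reference only.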

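Step 1

Analyze the middleware logs in the environment and find the first vulnerability (the one the attacker used to obtain data). Then, by analyzing the logs and traffic, use a script to recover the user password data the attacker obtained, and submit the first two usernames obtained. Submission format: flag{zhangsan-wangli}

This step is mainly about analyzing the web middleware logs (Apache, IIS, Nginx, and so on). Under /var/log there is an apache directory, and the log inside it holds several thousand entries. Inspecting a couple of them shows that the payloads are base64-encoded and that the attack is a time-based blind SQL injection. So I had an AI write an analysis script to recover the database name, table names, column names, and values that the blind injection extracted.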

172.17.0.1 - - [23/Jul/2025:03:16:15 +0000] "GET /search.php?query=KSBBTkQgNTk5NT1DQVNUKChDSFIoMTEzKXx8Q0hSKDk4KXx8Q0hSKDEwNyl8fENIUigxMTMpfHxDSFIoMTEzKSl8fChTRUxFQ1QgKENBU0UgV0hFTiAoNTk5NT01OTk1KSBUSEVOIDEgRUxTRSAwIEVORCkpOjp0ZXh0fHwoQ0hSKDExMyl8fENIUigxMTgpfHxDSFIoMTEyKXx8Q0hSKDExMil8fENIUigxMTMpKSBBUyBOVU1FUklDKSBBTkQgKDM2MTc9MzYxNw%3D%3D HTTP/1.1" 200 1307 "-" "sqlmap/1.9#stable (https://sqlmap.org)"
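To make the "base64-encoded payload" observation concrete, here is a minimal Python sketch that pulls the `query` parameter out of the sample log line above, URL-decodes it, and base64-decodes it. The function name `decode_payload` and the regular expression are my own illustration, not the script used in this write-up, and the regex assumes the request always targets `/search.php?query=`.

```python
import base64
import re
from typing import Optional
from urllib.parse import unquote

# Sample access-log line copied from the write-up (split only for readability).
LOG_LINE = (
    '172.17.0.1 - - [23/Jul/2025:03:16:15 +0000] "GET /search.php?query='
    'KSBBTkQgNTk5NT1DQVNUKChDSFIoMTEzKXx8Q0hSKDk4KXx8Q0hSKDEwNyl8fENIUigxMTMp'
    'fHxDSFIoMTEzKSl8fChTRUxFQ1QgKENBU0UgV0hFTiAoNTk5NT01OTk1KSBUSEVOIDEgRUxT'
    'RSAwIEVORCkpOjp0ZXh0fHwoQ0hSKDExMyl8fENIUigxMTgpfHxDSFIoMTEyKXx8Q0hSKDEx'
    'Mil8fENIUigxMTMpKSBBUyBOVU1FUklDKSBBTkQgKDM2MTc9MzYxNw%3D%3D'
    ' HTTP/1.1" 200 1307 "-" "sqlmap/1.9#stable (https://sqlmap.org)"'
)

# Assumption: every injected request hits /search.php with a single "query" parameter.
QUERY_RE = re.compile(r'"GET /search\.php\?query=(\S+) HTTP/')


def decode_payload(line: str) -> Optional[str]:
    """Return the decoded SQL payload of one log line, or None if it does not match."""
    match = QUERY_RE.search(line)
    if match is None:
        return None
    b64_text = unquote(match.group(1))  # turns %3D back into '='
    return base64.b64decode(b64_text).decode("utf-8", errors="replace")


if __name__ == "__main__":
    print(decode_payload(LOG_LINE))
```

For this particular line the decoded text begins with `) AND 5995=CAST(` and wraps a `CASE WHEN (5995=5995)` test between `CHR()` sequences that spell `qbkqq` and `qvppq`. Running the same function over every line of the log gives the readable payload stream that any further analysis has to start from.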

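In an offline, air-gapped on-site competition I would probably struggle to write this script myself; I can't help but marvel at how capable AI is. Here it is: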

import re

Output:

{

So the flag to submit is: flag{sunyue-chenhao}
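The original analysis script is not reproduced here, so the following is only a rough sketch of the general idea behind rebuilding data from blind-injection probes. It assumes each decoded payload has already been reduced to a triple `(position, threshold, is_true)`, meaning "the character code at `position` is greater than `threshold`", and that the analyst has decided whether each probe came back true (for a time-based probe, typically from the response delay). Both the triple format and the name `recover_text` are hypothetical.

```python
def recover_text(observations):
    """Rebuild a string from 'char code > threshold' probe results.

    observations: iterable of (position, threshold, is_true) triples.
    A position is resolved once its lower and upper bounds are adjacent;
    unresolved positions are rendered as '?'.
    """
    low = {}   # position -> largest threshold known to be exceeded
    high = {}  # position -> smallest threshold known NOT to be exceeded
    for pos, threshold, is_true in observations:
        if is_true:
            low[pos] = max(low.get(pos, 0), threshold)
        else:
            high[pos] = min(high.get(pos, 127), threshold)

    chars = []
    for pos in sorted(set(low) | set(high)):
        lo, hi = low.get(pos, 0), high.get(pos, 127)
        # code > lo and code <= hi leaves exactly one value when hi == lo + 1
        chars.append(chr(hi) if hi == lo + 1 else "?")
    return "".join(chars)


if __name__ == "__main__":
    # Simulated probes for the two-character string "su" (illustrative only).
    probes = [(i, t, ord(c) > t) for i, c in enumerate("su", 1) for t in (64, 96, 112, 114, 115, 116, 117, 120)]
    print(recover_text(probes))
```

The real work in a script like this is turning each decoded payload into such triples, which depends on the exact payload shape in the log and is not shown in the write-up.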

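Step 2

Using the usernames and passwords obtained, the attacker relied on password reuse to brute-force the FTP service. Analyze the traffic to find the open FTP port, then find the private file the attacker retrieved after logging in successfully, and submit its content. Submission format: flag{xxx}

The FTP port given by the challenge is 2121. FTP control traffic from a logged-in session also has a telltale string, "successfully" (vsFTPd, for instance, answers a directory change with "250 Directory successfully changed."), so we filter on both: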

tcp.port==2121&&tcp contains "successfully"

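Then follow the TCP stream, which reveals some information:

220 (vsFTPd 3.0.3)

This is clearly an interactive FTP session, and RETR sensitive_credentials.txt is the command that downloads the file.

The flag is the content of that file. The traffic shows that the file sits under /home/wangqiang/ftp; submit its content.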
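Once a stream has been exported as text, picking out the interesting FTP commands can be scripted. The sketch below is a hedged illustration: the transcript is a made-up stand-in in which only the `220 (vsFTPd 3.0.3)` banner, the `RETR sensitive_credentials.txt` command, and the `/home/wangqiang/ftp` directory come from this write-up. The username is only inferred from that path, the password is a placeholder, and `summarize_ftp` is a hypothetical helper name.

```python
import re

# Illustrative transcript; see the lead-in for which lines are from the capture.
TRANSCRIPT = """\
220 (vsFTPd 3.0.3)
USER wangqiang
331 Please specify the password.
PASS <redacted>
230 Login successful.
CWD /home/wangqiang/ftp
250 Directory successfully changed.
RETR sensitive_credentials.txt
226 Transfer complete.
"""

CMD_RE = re.compile(r"^(USER|PASS|CWD|RETR)\s+(.*)$")


def summarize_ftp(transcript: str) -> dict:
    """Collect the login user, login result, last directory, and downloaded files."""
    summary = {"user": None, "login_ok": False, "cwd": None, "downloads": []}
    for raw in transcript.splitlines():
        line = raw.strip()
        if line.startswith("230"):  # 230 = user logged in
            summary["login_ok"] = True
            continue
        match = CMD_RE.match(line)
        if match is None:
            continue
        verb, arg = match.groups()
        if verb == "USER":
            summary["user"] = arg
        elif verb == "CWD":
            summary["cwd"] = arg
        elif verb == "RETR":
            summary["downloads"].append(arg)
    return summary


if __name__ == "__main__":
    print(summarize_ftp(TRANSCRIPT))
```

The file content itself travels on the separate FTP data connection, so it has to be read from that stream rather than from the control channel parsed here.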

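Step 3

The nasty attacker found an arbitrary file upload point. Analyze the logs, the traffic, and the web application exposed on the host to find the file the attacker uploaded, and submit the password the webshell uses. Submission format: flag{password}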

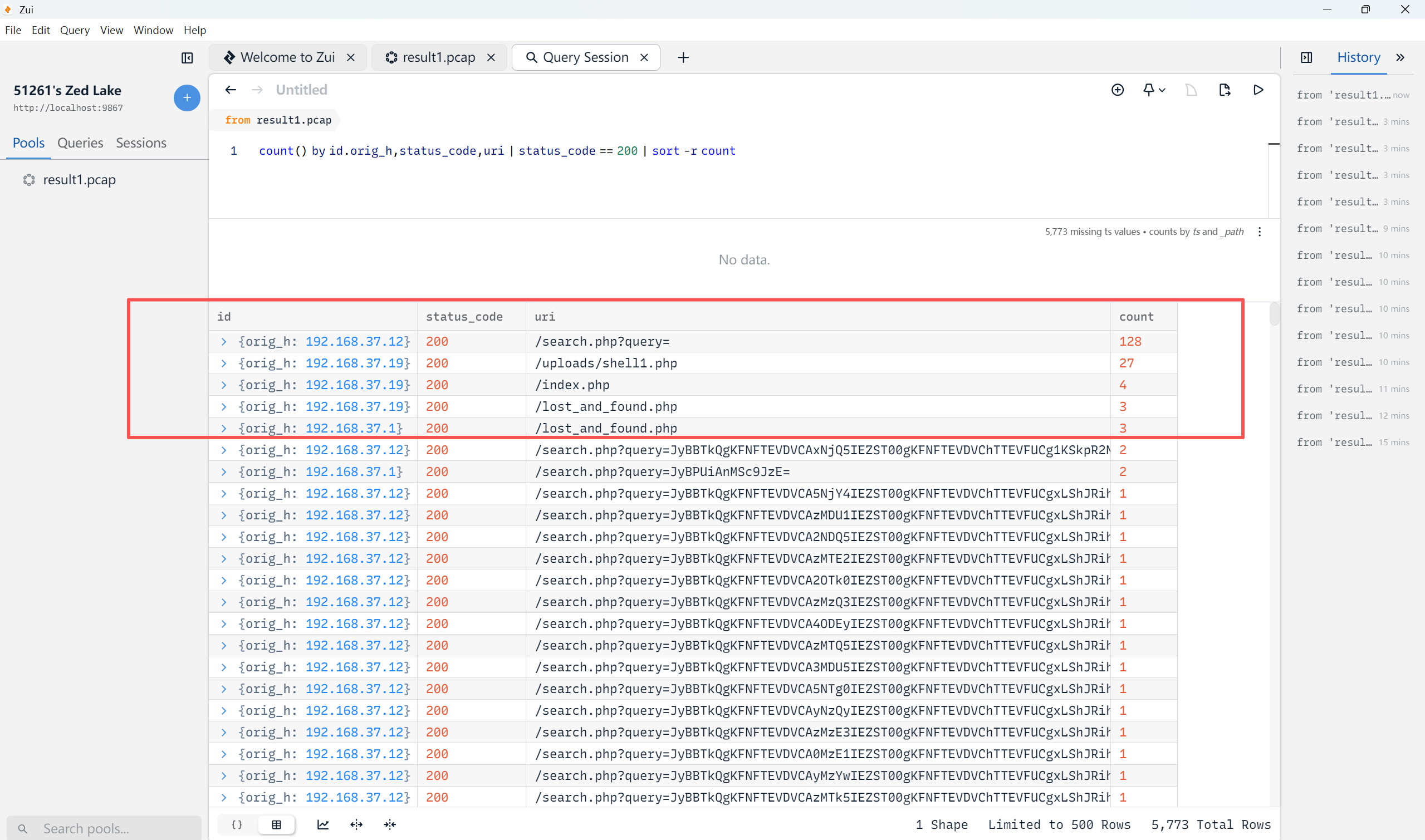

There is a traffic analysis tool called Zui that can present this kind of data visually:

count() by id.orig_h,status_code,uri | status_code == 200 | sort -r count

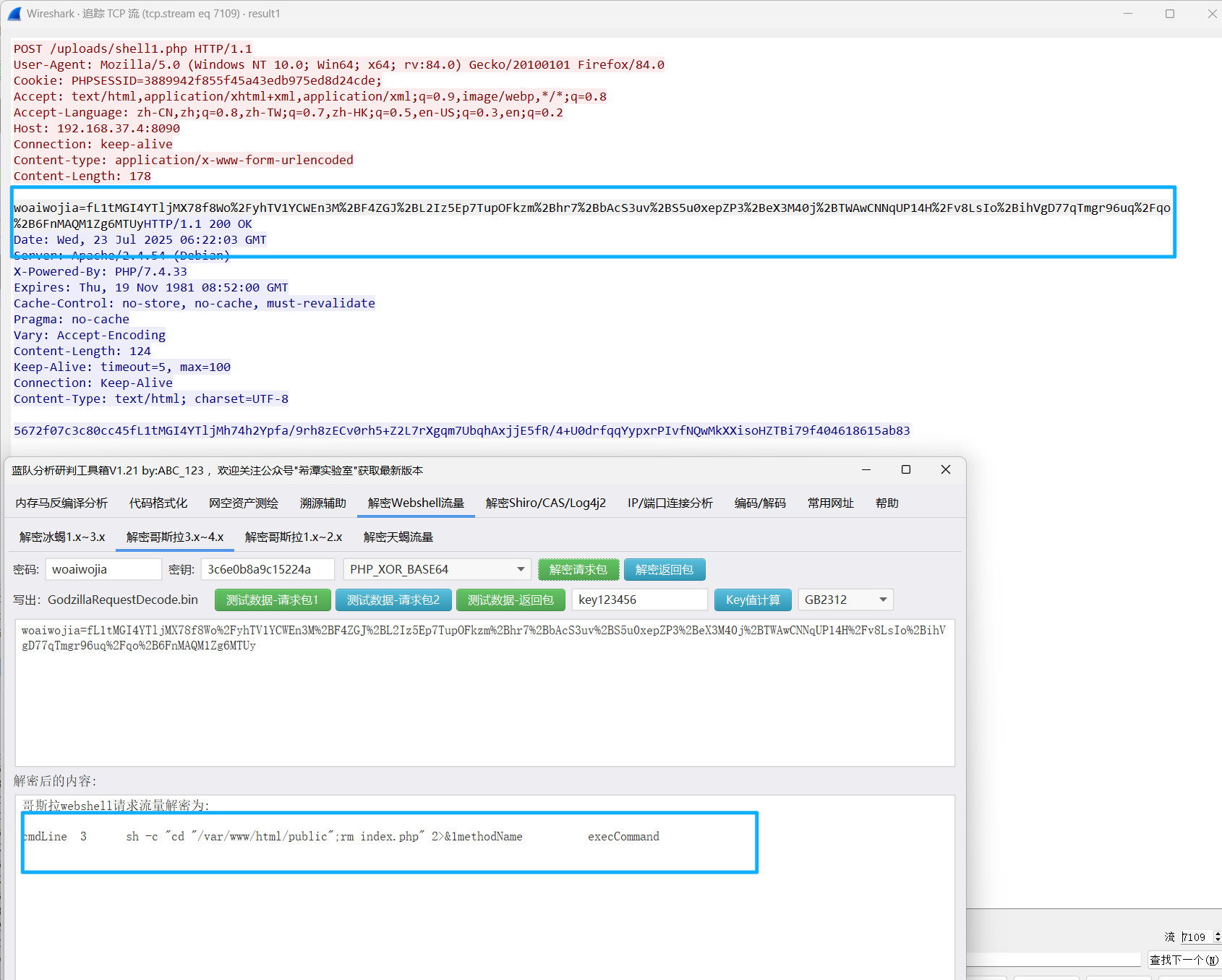
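If Zui is not available, a similar "count successful requests per client and URI" view can be approximated from the Apache access log instead of the capture. This is a swapped-in technique rather than what was done above; the sample lines and the `/uploads/shell1.php` web path are hypothetical, and the regex assumes the combined log format seen in Step 1.

```python
import re
from collections import Counter

# Matches: client ip, method, uri, status code of a combined-format log line.
LINE_RE = re.compile(r'^(\S+) \S+ \S+ \[[^\]]+\] "(\S+) (\S+) [^"]*" (\d{3}) ')

# Hypothetical sample lines for illustration only.
SAMPLE = [
    '172.17.0.1 - - [23/Jul/2025:03:20:00 +0000] "POST /uploads/shell1.php HTTP/1.1" 200 10 "-" "-"',
    '172.17.0.1 - - [23/Jul/2025:03:20:01 +0000] "POST /uploads/shell1.php HTTP/1.1" 200 10 "-" "-"',
    '172.17.0.1 - - [23/Jul/2025:03:20:02 +0000] "GET /index.php HTTP/1.1" 200 99 "-" "-"',
    '172.17.0.1 - - [23/Jul/2025:03:20:03 +0000] "GET /missing HTTP/1.1" 404 0 "-" "-"',
]


def top_requests(lines, status="200"):
    """Count (client ip, uri) pairs for one status code, most frequent first."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match and match.group(4) == status:
            ip, _method, uri, _status = match.groups()
            counts[(ip, uri)] += 1
    return counts.most_common()


if __name__ == "__main__":
    for (ip, uri), n in top_requests(SAMPLE):
        print(n, ip, uri)
```

Sorting by count pushes noisy endpoints to the top; unusual file names such as an unexpected `.php` under an uploads directory are then easy to spot by eye.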

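shell1.php stands out; a name like that looks very much like a backdoor. File uploads normally use POST, so we filter on that in Wireshark and then follow the TCP stream, which reveals the webshell 🐎.

http.request.method==POST -> Follow TCP Stream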

flag{woaiwojia}

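Step 4

Delete the webshell uploaded by the attacker, then read the flag value from /var/flag/1/flag and submit it.

Not much to say here: go to /var/www/html/public/uploads and delete shell1.php. My guess is that there is a check mechanism that generates /var/flag/1/flag once it sees that the file is gone; after that, just read its content.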
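Before deleting anything it can help to confirm which files in the web root actually look like webshells. The sketch below walks a directory and flags PHP files containing a few common markers. The marker list is a rough heuristic of my own (it will miss well-obfuscated shells and may flag legitimate code), and `find_suspicious_php` is a hypothetical helper, not part of the challenge environment.

```python
import re
from pathlib import Path

# Heuristic markers often seen in simple PHP webshells (illustrative, not exhaustive).
SUSPICIOUS = re.compile(
    rb"eval\s*\(|assert\s*\(|base64_decode\s*\(|\$_(?:POST|REQUEST)\b",
    re.IGNORECASE,
)


def find_suspicious_php(root):
    """Return [(path, [markers found])] for every *.php under root that matches."""
    hits = []
    for path in sorted(Path(root).rglob("*.php")):
        data = path.read_bytes()
        found = sorted({m.group(0).decode("ascii", "replace") for m in SUSPICIOUS.finditer(data)})
        if found:
            hits.append((str(path), found))
    return hits


if __name__ == "__main__":
    # Directory taken from the write-up; adjust as needed.
    for path, markers in find_suspicious_php("/var/www/html/public/uploads"):
        print(path, markers)
```

The script only reports; removing a file such as shell1.php is left as a deliberate manual step.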

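Step 5

Analyze the traffic. The attacker planted a web cryptomining trojan which, in a real-world setting, would consume visitors' resources to mine once they open the page (it has been rendered harmless in this environment). Submit the original name the file had when the attacker uploaded it. Submission format: flag{xxx.xxx}

Step 3 shows that the uploaded webshell is Godzilla (哥斯拉); its signature is obvious, and both pass and key are visible. We use a tool to decrypt the subsequent traffic directly.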
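For readers without a decryption tool at hand, the following sketch shows roughly what such a tool does, under an assumption the write-up does not confirm: that the shell is Godzilla's PHP_XOR_BASE64 variant. As I understand that variant, the shell stores the first 16 hex characters of md5(secret) as its key, XORs each byte with `key[(i + 1) & 15]`, base64-encodes the result, and usually gzip-compresses the plaintext; responses carry 16 extra characters before and after the base64 body. All function names and the sample secret are hypothetical.

```python
import base64
import gzip
import hashlib
import zlib


def derive_key(secret: str) -> bytes:
    """Assumed key derivation: first 16 hex chars of md5(secret)."""
    return hashlib.md5(secret.encode()).hexdigest()[:16].encode()


def xor_cycle(data: bytes, key: bytes) -> bytes:
    """XOR each byte with key[(i + 1) & 15], mirroring the PHP shell's loop."""
    return bytes(b ^ key[(i + 1) & 15] for i, b in enumerate(data))


def decode_request(b64_body: str, key: bytes) -> bytes:
    """Decode the (already URL-decoded) value of the password parameter."""
    raw = xor_cycle(base64.b64decode(b64_body), key)
    try:
        return gzip.decompress(raw)  # later requests are assumed to be gzip-compressed
    except (OSError, EOFError, zlib.error):
        return raw                   # fall back to the raw bytes if it is not gzip


def decode_response(body: str, key: bytes) -> bytes:
    """Strip the assumed 16-character prefix and suffix, then decode like a request."""
    return decode_request(body[16:-16], key)


if __name__ == "__main__":
    key = derive_key("example-secret")  # hypothetical secret, not the one in the capture
    wire = base64.b64encode(xor_cycle(gzip.compress(b"id"), key)).decode()
    print(decode_request(wire, key))
```

On real traffic the request value must first be taken from the POST body and URL-decoded; if the output is gibberish, the variant or key assumption is the first thing to re-check.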

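Decrypting stream 7109 shows:

index.php was deleted. Following the next streams to see what else was done, stream 7113 shows map.php being moved into /var/www/html/public and renamed to index.php:

Looking at index.php inside the container, one piece of code looks very suspicious: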

<script>

We hand it to the AI for analysis:

(function() {

It turns out to be a mining script, so the uploaded file is map.php without a doubt.

flag{map.php}

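Step 6

Analyze the traffic and investigate on the host: what mining pool address does the web mining trojan planted by the attacker use? Submit the pool address (you can try deleting it once the investigation is done). Submission format: flag{xxxxxxx.xxxx.xxx:xxxx}

The code analyzed by the AI in Step 5 already gives the pool address: flag{gulf.moneroocean.stream:10128}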
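Once a script has been deobfuscated, candidate pool addresses can also be pulled out mechanically. This is a small sketch under the assumption that the address appears as a plain `host:port` string in the deobfuscated text; the sample snippet is invented for illustration (only the pool address itself comes from this write-up), and the regex is a loose heuristic that can produce false positives.

```python
import re

# host.name.tld:port, case-insensitive; deliberately loose.
ENDPOINT_RE = re.compile(r"\b((?:[a-z0-9-]+\.)+[a-z]{2,}):(\d{2,5})\b", re.IGNORECASE)


def find_endpoints(text: str):
    """Return the sorted, de-duplicated host:port strings found in text."""
    return sorted({f"{host.lower()}:{port}" for host, port in ENDPOINT_RE.findall(text)})


if __name__ == "__main__":
    # Hypothetical deobfuscated fragment.
    sample = 'var pool = "wss://gulf.moneroocean.stream:10128";'
    print(find_endpoints(sample))
```

Anything the regex reports still needs a manual look, since ordinary strings such as `example.com:8080` in unrelated code match just as well.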

Step 7

After removing the obfuscated web mining code, read the flag value from /var/flag/2/flag and submit it.

Open index.php and delete that block of JS code.
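The manual edit can also be expressed as a small script, which is handy if several pages were infected. The sketch below removes only those `<script>` blocks that contain a chosen marker string; the marker is whatever stable substring identifies the injected block (a hypothetical value here), and `strip_scripts_containing` is an illustrative helper rather than anything the challenge provides.

```python
import re

SCRIPT_RE = re.compile(r"<script\b[^>]*>.*?</script>", re.IGNORECASE | re.DOTALL)


def strip_scripts_containing(html: str, marker: str) -> str:
    """Remove every <script>...</script> block whose text contains marker."""
    def replace(match):
        block = match.group(0)
        return "" if marker in block else block
    return SCRIPT_RE.sub(replace, html)


if __name__ == "__main__":
    # Hypothetical page content and marker.
    page = '<h1>Bus</h1><script>var _0xabc = "miner";</script><script>init();</script>'
    print(strip_scripts_containing(page, "_0xabc"))
```

Writing the cleaned text back to index.php, ideally after keeping a backup copy, is left out on purpose so the sketch has no side effects.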